The Ultimate Alertmanager Configuration Guide: From the “Dark Path” to the Regular Army

When Prometheus Operator's AlertmanagerConfig flatly refused to take effect, I went straight for the encoded configuration instead...

Background

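After deploying Prometheus Operator, the AlertmanagerConfig resources I had carefully written simply would not take effect. After countless rounds of fruitless debugging, I decided to bypass the Operator and edit the generated default configuration directly. That configuration lives in a Secret, gzip-compressed and base64-encoded (not actually encrypted). This is the dark path, but the results are immediate!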

The Dark Path: Strike Straight at the Heart

1. Fetch the encoded configuration

```bash
kubectl get secret alertmanager-rancher-monitoring-alertmanager-generated \
  -n cattle-monitoring-system -o yaml > secret.yaml
```

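```bash
# Install yq (v4)
wget https://github.com/mikefarah/yq/releases/download/v4.25.1/yq_linux_amd64 -O /usr/local/bin/yq
chmod +x /usr/local/bin/yq

# Decode the Alertmanager config (base64-decode, then gunzip).
# The single quotes keep the inner double quotes, so yq reads the key
# "alertmanager.yaml.gz" instead of the path alertmanager -> yaml -> gz.
yq eval '.data."alertmanager.yaml.gz"' secret.yaml | base64 -d | gzip -d > alertmanager.yaml

# Decode the template file (base64 only, it is not compressed)
yq eval '.data."rancher_defaults.tmpl"' secret.yaml | base64 -d > rancher_defaults.tmpl
```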
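Despite the “decrypt” wording you often see, nothing here is encrypted. The `alertmanager.yaml.gz` field is gzip-compressed and then base64-encoded, and `rancher_defaults.tmpl` is plain base64. The sketch below round-trips a small sample config through both transformations. It shows that the decode chain above is the exact inverse of the encode chain used later. It runs locally with no cluster. It assumes GNU coreutils for `base64 -w0`, which the original commands also assume. `encode_gz_b64` and `decode_gz_b64` are hypothetical helper names, not part of any tool.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: gzip, then base64 on a single line
# (the format of the alertmanager.yaml.gz key in the generated Secret).
encode_gz_b64() {
  gzip -c | base64 -w0
}

# Hypothetical helper: the inverse chain, base64-decode, then gunzip.
decode_gz_b64() {
  base64 -d | gzip -d
}

# Small sample config without a trailing newline, so $(...) leaves it intact.
sample=$'global:\n  resolve_timeout: 5m'

encoded=$(printf '%s' "$sample" | encode_gz_b64)
decoded=$(printf '%s' "$encoded" | decode_gz_b64)

if [ "$decoded" = "$sample" ]; then
  echo "round-trip OK"
else
  echo "round-trip FAILED" >&2
  exit 1
fi
```

Against the real export, the same helper applies directly: `yq eval '.data."alertmanager.yaml.gz"' secret.yaml | decode_gz_b64`.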

```yaml
# alertmanager.yaml, after editing
global:
  resolve_timeout: 5m
  smtp_smarthost: smtp.qq.com:465
  smtp_from: xxxx@qq.com
  smtp_auth_username: xxxx@qq.com
  smtp_auth_password: xxxxxxx
  smtp_require_tls: false
route:
  receiver: "k8s-alarm"
  group_by: [alertname]
  routes:
    - receiver: "null"
      matchers:
        - alertname = "Watchdog"
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 12h
receivers:
  - name: "k8s-alarm"
    email_configs:
      - to: test@gmail.cn
        send_resolved: true
  - name: "null"
templates:
  - /etc/alertmanager/config/*.tmpl
```
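Here is how this routing tree behaves. Alertmanager checks the child `routes` in order, and without `continue: true` the first matching child wins. An alert that matches no child falls back to the root route's `receiver`. So `Watchdog`, the always-firing heartbeat alert, lands in the empty `"null"` receiver and is effectively dropped. Everything else is emailed via `k8s-alarm`.

The shell function below is only a toy model of that decision for this specific config. `pick_receiver` is a hypothetical name. It is not how Alertmanager is implemented, and it ignores grouping and timing entirely.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Toy model of the route tree in alertmanager.yaml above (hypothetical helper).
# Child routes are checked in order; the first match wins (no `continue: true`).
# If no child matches, the root route's receiver is used.
pick_receiver() {
  local alertname="$1"
  case "$alertname" in
    Watchdog) echo "null" ;;      # routes[0]: alertname = "Watchdog"
    *)        echo "k8s-alarm" ;; # fallback: root route receiver
  esac
}

for a in Watchdog HighRequestRate KubePodCrashLooping; do
  printf '%-20s -> %s\n' "$a" "$(pick_receiver "$a")"
done
```

To check the real file rather than a model, Alertmanager's `amtool` provides `amtool check-config alertmanager.yaml` and `amtool config routes test --config.file=alertmanager.yaml alertname=Watchdog`.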

```bash
# Compress the edited config
gzip -c alertmanager.yaml > alertmanager.yaml.gz

# Base64-encode (single line, as Secret .data expects)
ALERTMANAGER_CONFIG=$(base64 -w0 alertmanager.yaml.gz)
TEMPLATE_CONFIG=$(base64 -w0 rancher_defaults.tmpl)

# Generate the new Secret
yq eval ".data.\"alertmanager.yaml.gz\" = \"$ALERTMANAGER_CONFIG\" |
  .data.\"rancher_defaults.tmpl\" = \"$TEMPLATE_CONFIG\"" secret.yaml > updated-secret.yaml

# Rename the Secret (quote the sed expression, since it contains spaces)
sed -i 's/name: alertmanager-.*/name: alertmanager-main/' updated-secret.yaml

# Apply the configuration
kubectl apply -f updated-secret.yaml -n cattle-monitoring-system
```
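There are two practical snags in this step. First, the `sed` expression must be quoted because it contains spaces. Second, `secret.yaml` was exported from a live object, so it still carries server-populated fields such as `resourceVersion`, `uid` and `creationTimestamp`. The API server may reject creating a new Secret that still has a `resourceVersion` set.

The sketch below renames only the top-level `metadata.name` and drops those single-line fields. It assumes GNU sed and the two-space indentation that `kubectl get -o yaml` emits. It runs on a small made-up sample file, so it can be tried without a cluster. A multi-line `ownerReferences` block in a real export is not handled here, so check that part by hand.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Throwaway directory with a made-up sample export (hypothetical values).
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cat > "$workdir/updated-secret.yaml" <<'EOF'
apiVersion: v1
data:
  alertmanager.yaml.gz: SGVsbG8=
kind: Secret
metadata:
  creationTimestamp: "2024-01-01T00:00:00Z"
  name: alertmanager-rancher-monitoring-alertmanager-generated
  namespace: cattle-monitoring-system
  resourceVersion: "12345"
  uid: 00000000-0000-0000-0000-000000000000
type: Opaque
EOF

# Rename only metadata.name (exactly two spaces of indentation); the
# expression is quoted so its spaces do not split it into separate arguments.
sed -i 's/^  name: alertmanager-.*/  name: alertmanager-main/' "$workdir/updated-secret.yaml"

# Drop single-line, server-populated metadata fields (GNU sed, -E for |).
sed -i -E '/^  (resourceVersion|uid|creationTimestamp):/d' "$workdir/updated-secret.yaml"

cat "$workdir/updated-secret.yaml"
```

Against the real export, the same two `sed` lines would run on `updated-secret.yaml` just before `kubectl apply`.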

```yaml
# Modify the volumes configuration
volumes:
  - name: config-volume
    secret:
      secretName: alertmanager-main # replaces the default value
```


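Warning: this approach is fast but risky. The Operator owns both the generated Secret and the Alertmanager StatefulSet, so an Operator upgrade (or even a routine reconcile) can overwrite these changes!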

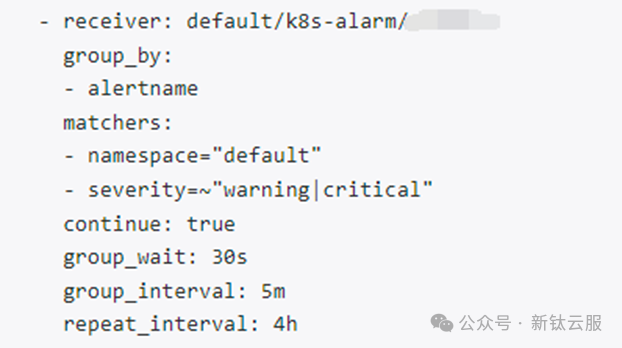

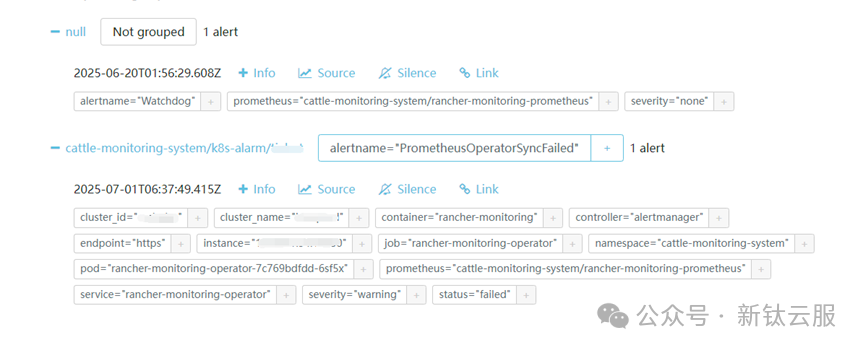

The Regular Army: The Elegant Way

1. Configure alert receivers and routes

```yaml
# k8s-alarm.yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: k8s-alarm
  namespace: test
spec:
  receivers:
    - name: tialert
      webhookConfigs:
        - url: https://your-webhook-url
          sendResolved: true
  route:
    groupBy: [alertname]
    groupInterval: 5m
    groupWait: 30s
    matchers:
      - name: severity
        value: "warning|critical"
        regex: true
    receiver: tialert
    repeatInterval: 4h
```
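Two details of how this route matches are easy to miss.

- **Anchored regexes.** Alertmanager regex matchers are anchored at both ends, so `warning|critical` matches exactly `warning` or `critical`, not `warning-low`.
- **Injected namespace matcher.** As I understand the Operator's default behaviour, it does not use an AlertmanagerConfig route as-is. It adds a `namespace` matcher equal to the resource's own namespace (here `test`), so only alerts carrying `namespace="test"` reach `tialert`.

An alert whose expression aggregated the `namespace` label away (for example `sum(...) by (service)`) has no such label and will not match. That is one plausible reason an AlertmanagerConfig seems not to take effect.

The toy function below models just those two checks. `route_matches` is a hypothetical name. It uses `grep -Ex` for whole-string regex matching and is a sketch, not the Operator's or Alertmanager's code.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical toy model of the route in k8s-alarm.yaml as the Operator is
# assumed to render it by default: severity =~ "warning|critical" AND namespace = "test".
route_matches() {
  local severity="$1" namespace="$2"
  # Alertmanager anchors regexes at both ends; grep -x gives the same
  # whole-string behaviour for this simple pattern.
  printf '%s\n' "$severity" | grep -Eqx 'warning|critical' || return 1
  # Matcher assumed to be injected by the Operator's default namespace strategy.
  [ "$namespace" = "test" ]
}

check() {
  local r
  if route_matches "$1" "$2"; then r="tialert"; else r="no match"; fi
  printf 'severity=%-12s namespace=%-8s -> %s\n' "$1" "${2:-<none>}" "$r"
}

check critical test
check warning-low test
check critical ""
check info test
```

If an alert has to keep its namespace, keeping `namespace` in the `by (...)` clause of its expression is one way to do it. Recent Operator versions also expose an `alertmanagerConfigMatcherStrategy` setting on the Alertmanager resource; check the documentation for the version you run before relying on it.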

```yaml
# null.yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: silence-watchdog
  namespace: cattle-monitoring-system
spec:
  receivers:
    - name: null-receiver
  route:
    matchers:
      - name: alertname
        value: "Watchdog"
    receiver: null-receiver
```

```yaml
# app-alert.yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: app-backend-alerts
  namespace: test
  labels:
    prometheus: rancher-monitoring
    role: alert-rules
spec:
  groups:
    - name: app-backend
      rules:
        - alert: HighRequestRate
          expr: |
            sum(rate(http_requests_total{job="app-backend"}[5m])) by (service) > 100
          for: 10m
          labels:
            severity: critical
          annotations:
            summary: "High request rate on {{ $labels.service }}"
            description: "Request rate is {{ $value }} per second"
```
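A rough feel for the threshold: `rate(...[5m])` is the per-second increase of the counter over the last five minutes. A service therefore crosses 100 req/s once it serves more than 100 × 300 = 30,000 requests in a 5-minute window. With `for: 10m`, the condition must hold for ten minutes before the alert moves from pending to firing.

The sketch below does that back-of-the-envelope arithmetic from two made-up counter samples; the helper name `per_second_rate` and the numbers are hypothetical. Prometheus's real `rate()` also extrapolates to the window edges and handles counter resets, so treat this as an approximation.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: naive per-second rate between two counter samples,
# (v2 - v1) / (t2 - t1). Ignores extrapolation and counter resets.
per_second_rate() {
  awk -v v1="$1" -v v2="$2" -v t1="$3" -v t2="$4" \
    'BEGIN { printf "%.2f\n", (v2 - v1) / (t2 - t1) }'
}

threshold=100

# Made-up samples of http_requests_total taken 300 s (5m) apart.
r=$(per_second_rate 120000 156000 0 300)
echo "rate = $r req/s"

# Compare as numbers in awk, since the shell's [ ] only handles integers.
if awk -v r="$r" -v t="$threshold" 'BEGIN { exit !(r + 0 > t + 0) }'; then
  echo "above threshold: alert goes pending, fires if this holds for 10m"
else
  echo "below threshold"
fi
```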

Summary and Comparison


Recommendation: the “dark path” is fine for quick validation while debugging, but always use the proper approach in production!

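Whether you take the “dark path” or march with the “regular army”, the goal is the same: an alerting system that is stable, reliable, and under control. While debugging, a well-chosen shortcut can validate an idea quickly, but never let a temporary hack turn into long-term debt. A truly skilled operator is not someone who never takes shortcuts, but someone who knows when to turn back and distill the lessons of the “dark path” into the conventions of the proper one.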

THE END